Create a Prompt

How to create, version and use a Prompt in TrainKore.

TrainKore acts as a registry of your Prompts so you can centrally manage all their versions and Logs, and evaluate and improve your AI systems.

This guide will show you how to create a Prompt in the UI or via the SDK/API.

You can create an account now by going to the Sign up page.

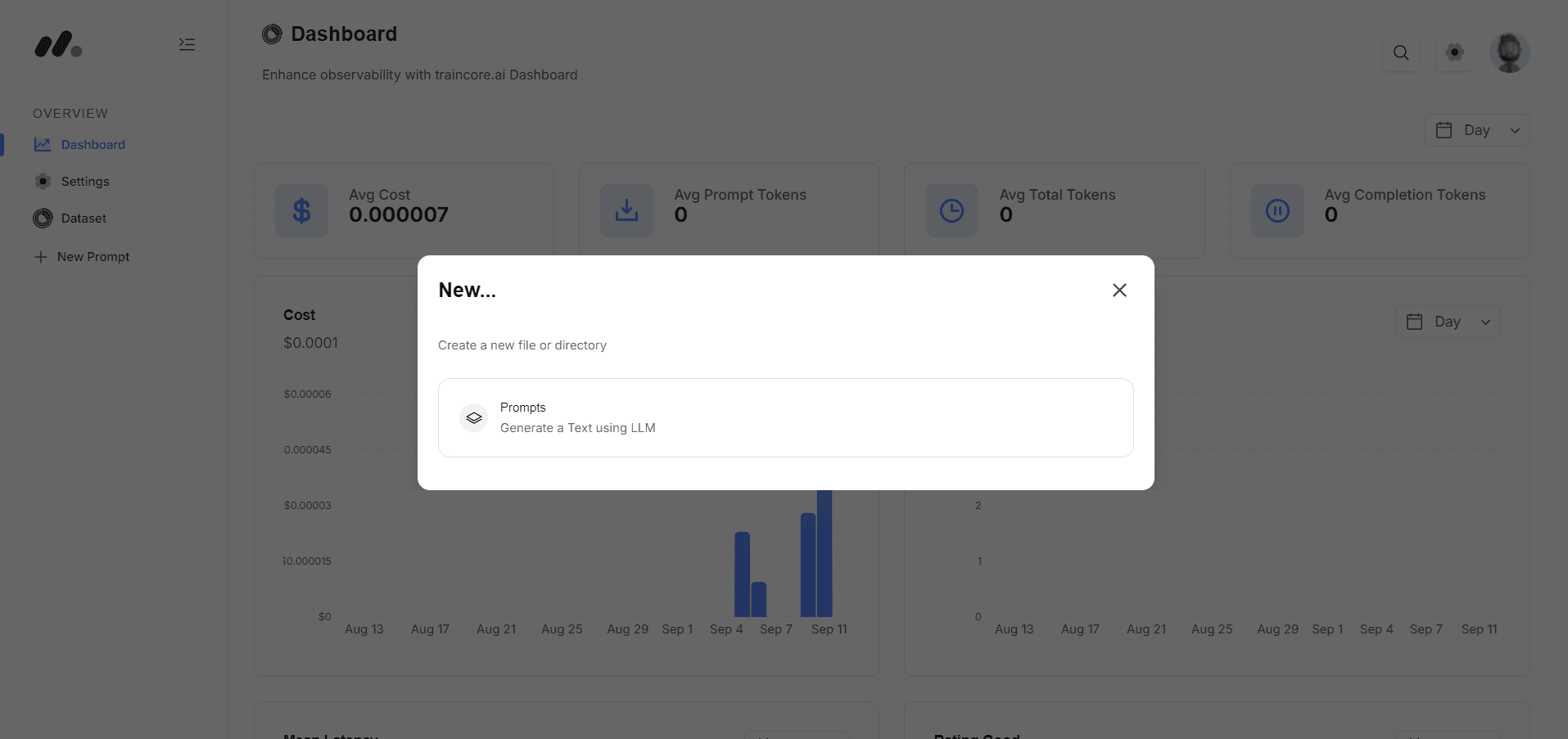

Create a Prompt File

When you first open TrainKore you’ll see your File navigation on the left. Click ‘+ New’ and create a Prompt.

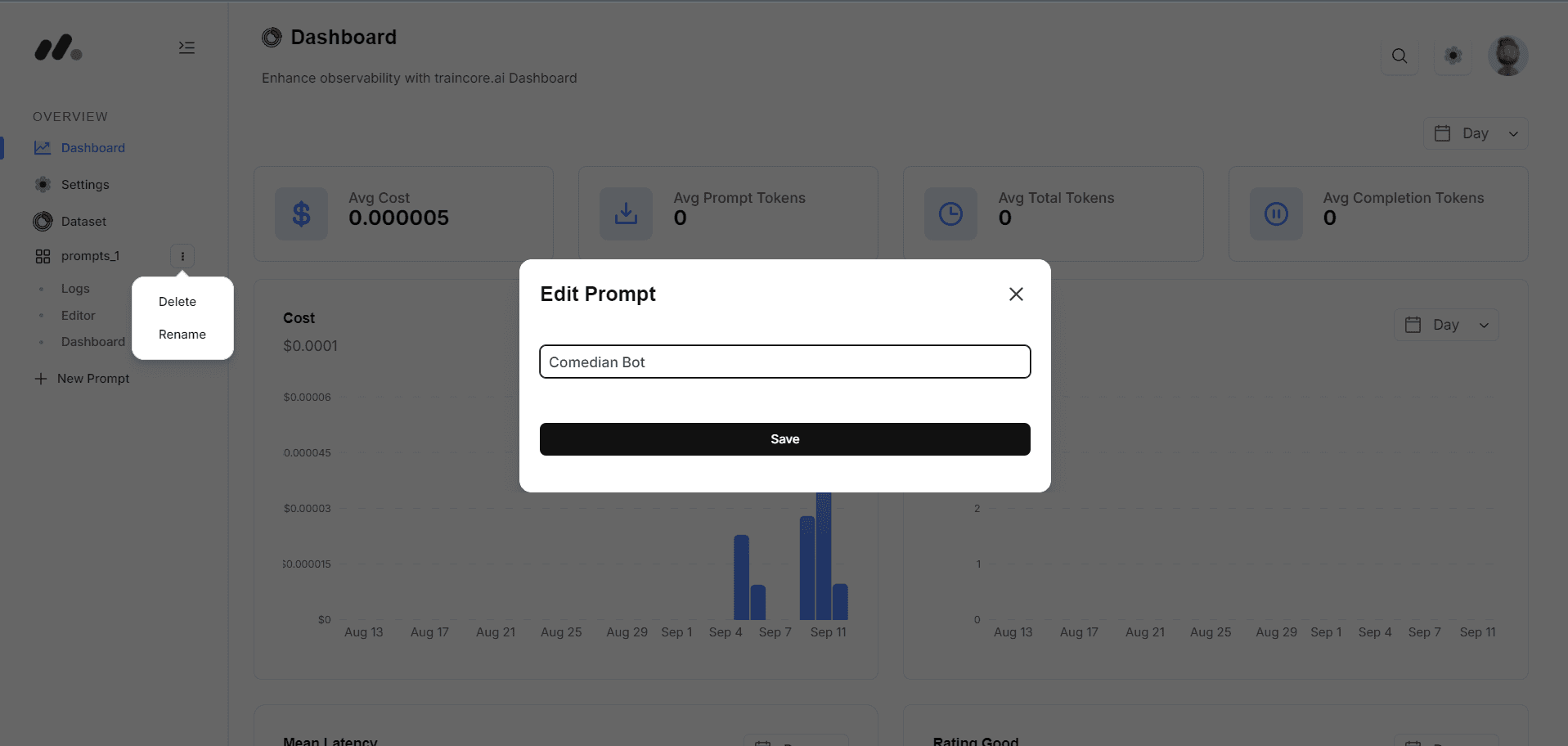

In the sidebar, select Rename and rename this File to “Comedian Bot”. You can do this now or later.

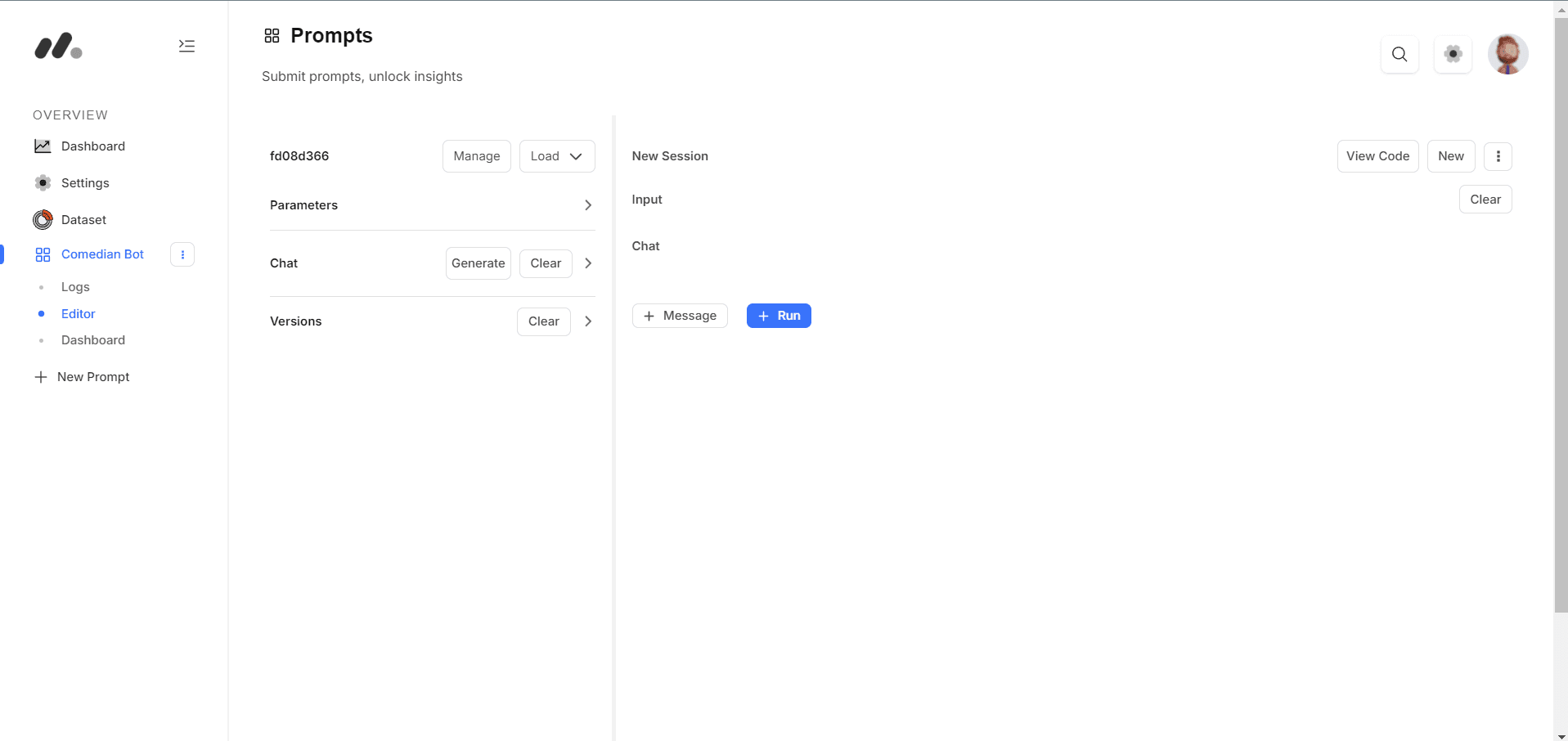

Create the Prompt template in the Editor

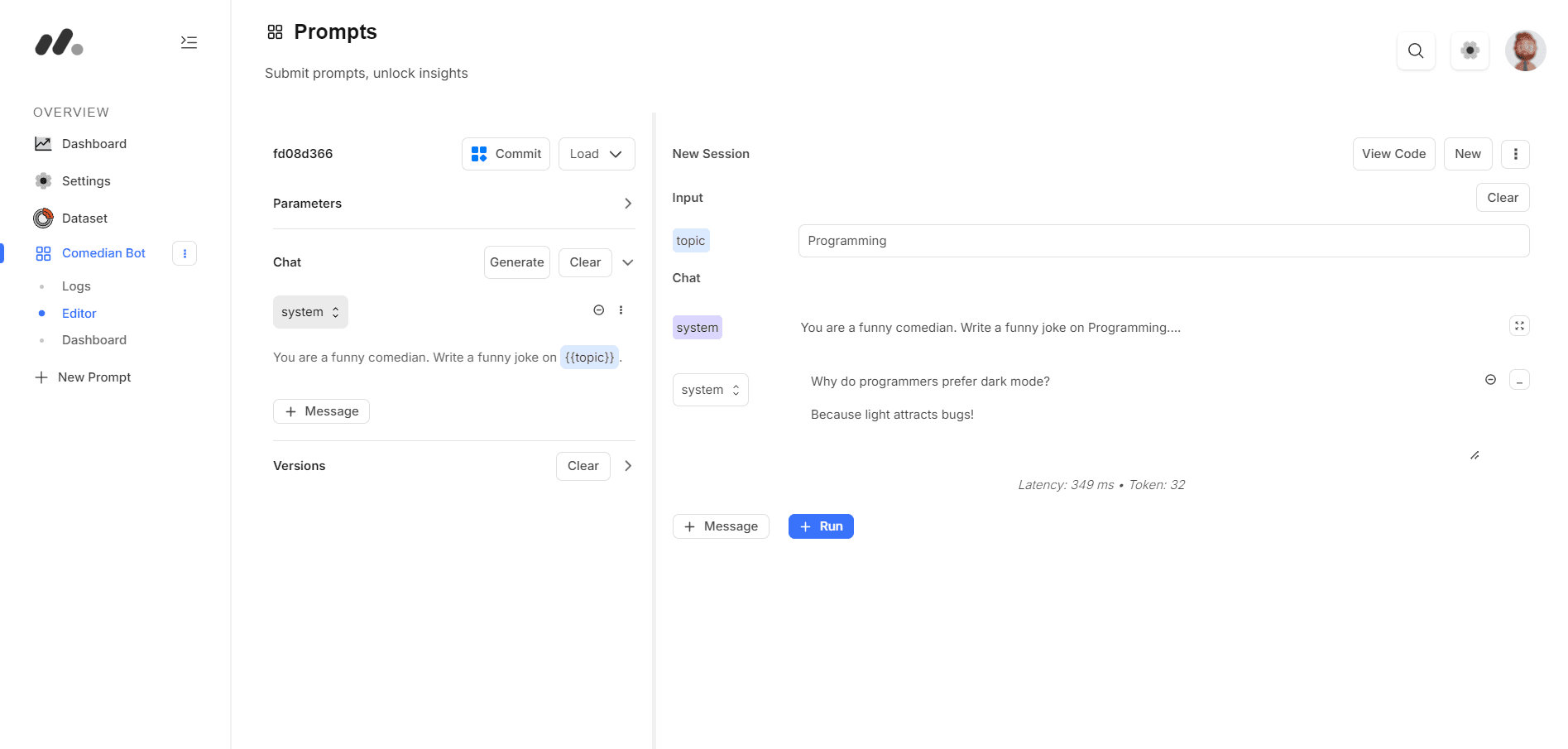

The left-hand side of the screen defines your Prompt: its parameters, such as model, temperature and template. The right-hand side is a single chat session with this Prompt.

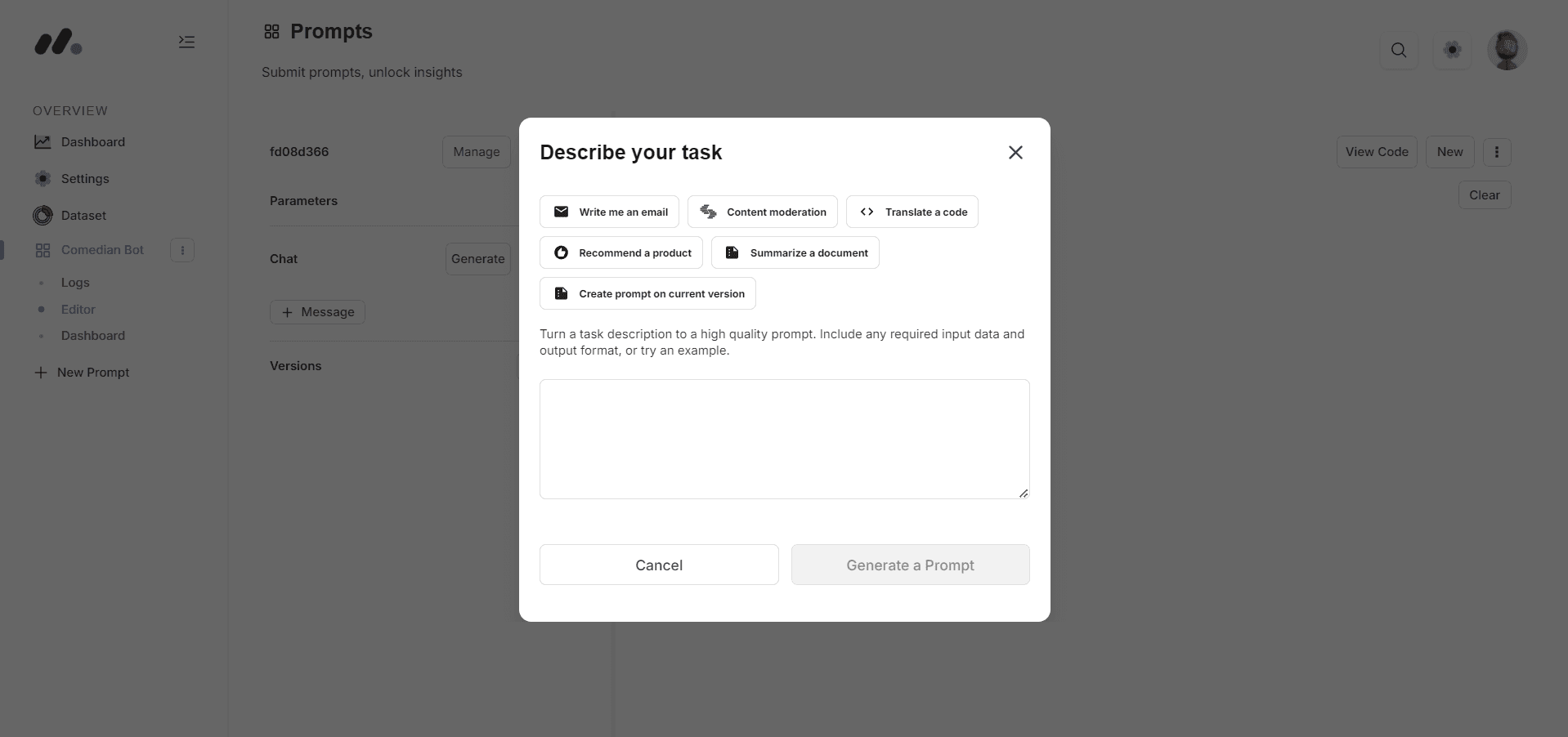

Click the “Generate” button to generate a system message for the chat template.

Click the “+ Message” button within the chat template to add a system message.

Add the following templated message to the chat template.

You are a funny comedian. Write a joke about {{topic}}.

This message forms the chat template. It has an input slot called topic (surrounded by two curly brackets) for an input value that is provided each time you call this Prompt.

On the right-hand side of the page, you’ll now see a box in the Inputs section for topic.
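To make the idea of an input slot concrete, here is a minimal Python sketch of how a double-curly-bracket template could be scanned for input names and filled in. This is an illustration only, not TrainKore's implementation; the helper names `find_inputs` and `render` are hypothetical.

```python
import re

# Matches {{name}} slots, allowing optional spaces inside the brackets.
SLOT_PATTERN = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def find_inputs(template: str) -> list[str]:
    """Return the distinct slot names in order of first appearance."""
    seen: list[str] = []
    for name in SLOT_PATTERN.findall(template):
        if name not in seen:
            seen.append(name)
    return seen


def render(template: str, inputs: dict[str, str]) -> str:
    """Replace each {{name}} slot with its value; raise if an input is missing."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in inputs:
            raise KeyError(f"missing input: {name}")
        return inputs[name]

    return SLOT_PATTERN.sub(substitute, template)


template = "You are a funny comedian. Write a joke about {{topic}}."
print(find_inputs(template))                 # ['topic']
print(render(template, {"topic": "music"}))  # You are a funny comedian. Write a joke about music.
```

Each distinct slot name found this way corresponds to one box in the Inputs section, and the rendered string is what would be sent as the system message.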

- Add a value for topic, e.g. music, jogging, whatever.
- Click Run in the bottom right of the page.

This will call OpenAI’s model and return the assistant response. Feel free to try other values; the model is very funny.

You now have a first version of your Prompt that you can use.

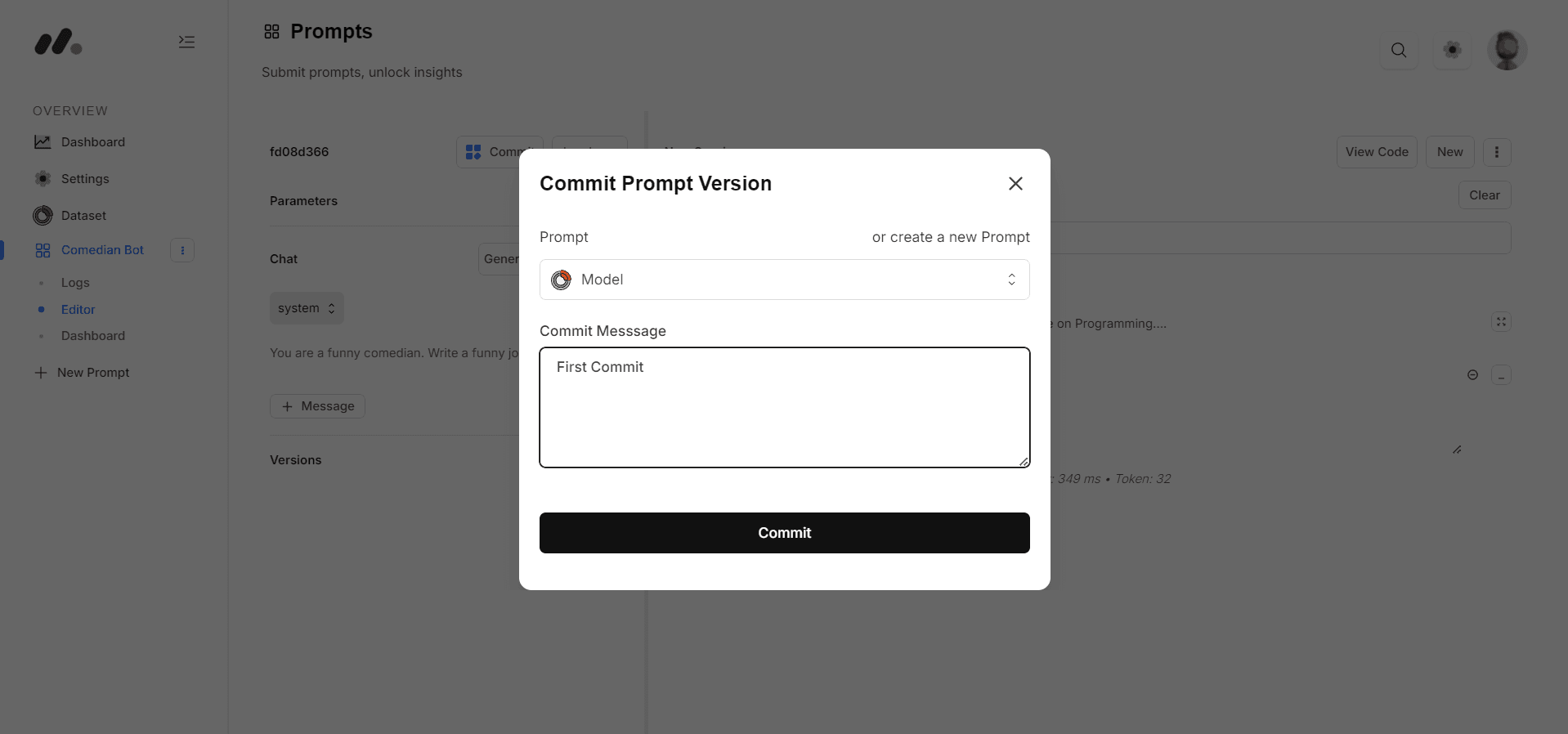

Commit your first version of this Prompt

- Click the Commit button.
- Put “initial version” in the commit message field.
- Click Commit.
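As a mental model for what committing does, the sketch below treats a version as the fixed combination of model, temperature and template, and a Prompt File as a list of committed versions. All names here (`PromptVersion`, `PromptFile`, `version_id`) are hypothetical, the model name and temperature are placeholders, and deriving the ID from a hash of the parameters is an assumption made for illustration rather than a description of how TrainKore identifies versions.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass(frozen=True)
class PromptVersion:
    """The parameters that define one version of a Prompt (hypothetical model)."""
    model: str
    temperature: float
    template: str

    def version_id(self) -> str:
        # Assumption: identical parameters always map to the same ID.
        payload = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


@dataclass
class PromptFile:
    """A named Prompt with its history of committed versions."""
    name: str
    commits: list = field(default_factory=list)  # (version_id, message, version)

    def commit(self, version: PromptVersion, message: str) -> str:
        vid = version.version_id()
        # Only record a new entry when the parameters actually changed.
        if all(existing_id != vid for existing_id, _, _ in self.commits):
            self.commits.append((vid, message, version))
        return vid


comedian = PromptFile("Comedian Bot")
v1 = PromptVersion(
    model="example-model",  # placeholder, not a real model name
    temperature=0.7,        # placeholder value
    template="You are a funny comedian. Write a joke about {{topic}}.",
)
first_id = comedian.commit(v1, "initial version")
```

Under this model, changing any parameter (for example the temperature) yields a different version, while re-committing unchanged parameters does not create a duplicate.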

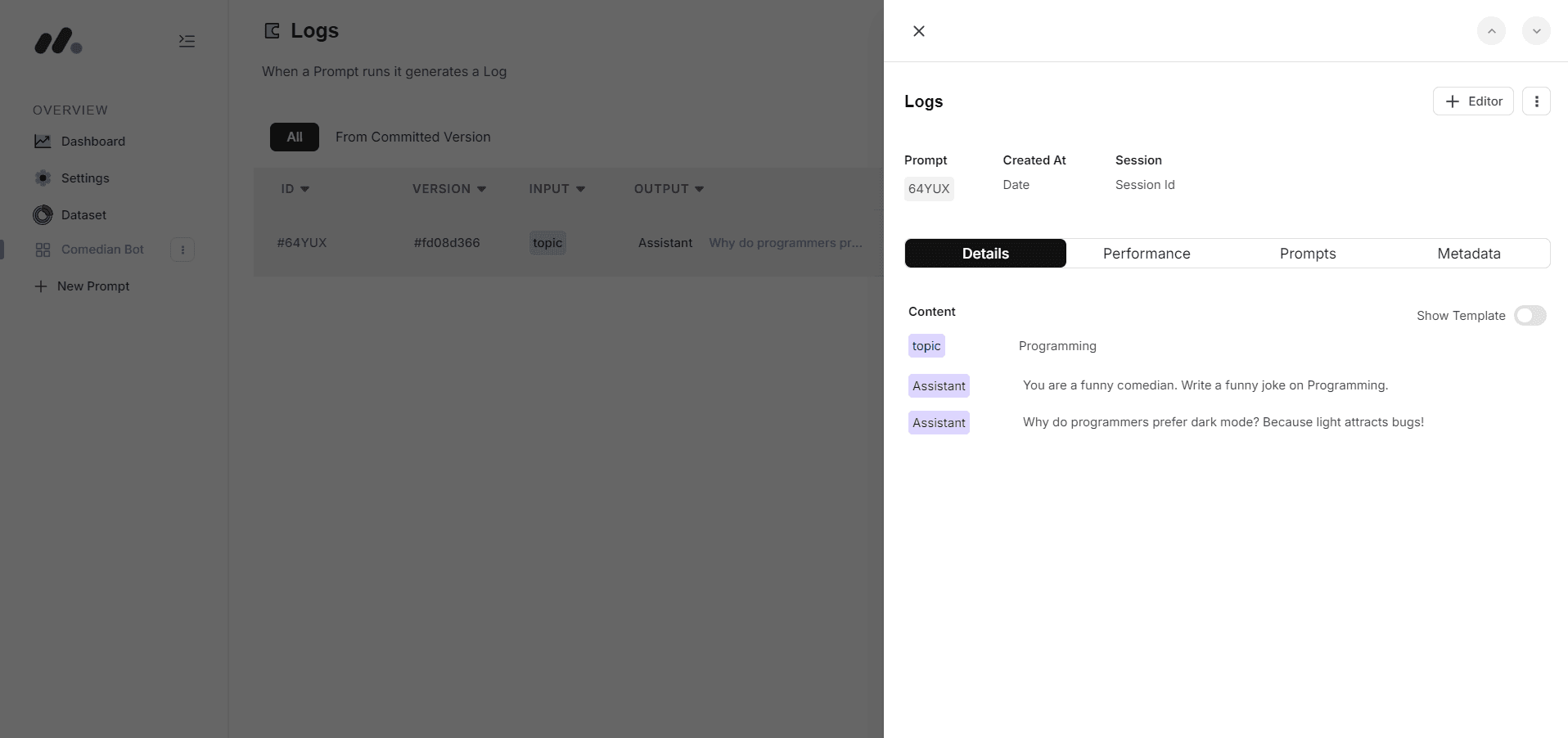

View the logs

Under the Prompt File, click ‘Logs’ to view all the generations from this Prompt.

Click on a row to see the details of which version of the Prompt generated it. From here you can give feedback on that generation, see performance metrics, open this example in the Editor, or add this Log to a dataset.
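One way to picture a Log is as a record that ties a single generation back to the version that produced it. The following sketch is a hypothetical data model, not TrainKore's schema: the `Log` class, its field names, the version IDs and the placeholder outputs are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Log:
    """One generation, linked to the Prompt version that produced it."""
    version_id: str
    inputs: dict
    output: str
    feedback: list = field(default_factory=list)


def group_by_version(logs: list) -> dict:
    """Collect Logs under the version that generated them."""
    grouped = defaultdict(list)
    for log in logs:
        grouped[log.version_id].append(log)
    return dict(grouped)


# Placeholder version IDs and outputs; real Logs would hold model responses.
logs = [
    Log("v1", {"topic": "music"}, "(assistant response)"),
    Log("v1", {"topic": "jogging"}, "(assistant response)"),
    Log("v2", {"topic": "music"}, "(assistant response)"),
]
logs[0].feedback.append("good")  # feedback attaches to a single generation

by_version = group_by_version(logs)
print({vid: len(items) for vid, items in by_version.items()})  # {'v1': 2, 'v2': 1}
```

Grouping Logs by version in this way is what makes it possible to compare how different versions of the same Prompt perform.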